组网说明:

hadoop1.localdomain 192.168.11.81(namenode)

hadoop2.localdomain 192.168.11.82(namenode)

hadoop3.localdomain 192.168.11.83(datanode)

hadoop4.localdomain 192.168.11.84(datanode)

hadoop5.localdomain 192.168.11.85(datanode)

第一步:卸载openjdk

rpm -qa |grep java

#查看已经安装的与java相关的包

rpm -e java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64

rpm -e --nodeps java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64

rpm -e tzdata-java-2013g-1.el6.noarch

#################################################################

第二步:禁用IPV6

echo net.ipv6.conf.all.disable_ipv6=1 >>/etc/sysctl.conf

echo "alias net-pf-10 off" >>/etc/modprobe.d/dist.conf

echo "alias ipv6 off" >>/etc/modprobe.d/dist.conf

reboot

##########################################################

第三步:解压hadoop压缩包

把压缩包导入到/usr目录

cd /usr

tar -zxvf hadoop--642.4.0.tar.gz

把目录重命名为hadoop

mv hadoop--642.4.0 hadoop

安装jdk1.7,把安装包导入到/usr目录

cd /usr

rpm -ivh jdk-7u71-linux-x64.rpm

####################################################################

第四步:设置JAVA&HADOOP环境变量

vi /etc/profile

#set java environment

export JAVA_HOME=/usr/java/jdk1.7.0_71/

export HADOOP_PREFIX=/usr/hadoop/

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

#set hadoop environment

export HADOOP_HOME=/usr/hadoop/

export PATH=$HADOOP_HOME/bin:$PATH:$HADOOP_HOME/sbin/

#使配置生效

source /etc/profile

或者重启服务器,也会重新加载该配置

################################################

第五步:创建hdfs需要的目录

mkdir -p /home/hadoop/dfs/name

mkdir -p /home/hadoop/dfs/data

##############################

第六步:java enviromnet追加到hadoop环境变量配置文件中

echo export JAVA_HOME=/usr/java/jdk1.7.0_71/ >>/usr/hadoop/etc/hadoop/hadoop-env.sh

echo export JAVA_HOME=/usr/java/jdk1.7.0_71/ >>/usr/hadoop/etc/hadoop/yarn-env.sh

####################################################################################

第七步:免ssh key,可以只在namenode上,因为一般情况下,由namenode控制datanode

略,查看相关配置手册

####################

第八步:编辑datanode配置文件,只在namenode上

[root@hadoop1 hadoop]# pwd

/usr/hadoop/etc/hadoop

[root@hadoop1 hadoop]# more slaves

hadoop3.localdomain

hadoop4.localdomain

hadoop5.localdomain

########################################

[root@hadoop1 hadoop]# more core-site.xml

#

#

#

#

#

#

###########################################

hdfs-site.xml

###############################

#################################

mapred-site.xml

###########################

yarn-site.xml

#############################

1、在hadoop1上,把hadoop所在文件夹拷贝到其他节点上,不用更改配置

scp /usr/hadoop hadoop2:/usr/

scp /usr/hadoop hadoop3:/usr/

scp /usr/hadoop hadoop4:/usr/

scp /usr/hadoop hadoop5:/usr/

2、格式化namenode,两个namenode都要格式化,切clusterid

hdfs namenode -format -clusterId MyHadoopCluster

MyHadoopCluster是以字符串的形式

3、每次格式化namenode之前,要删除缓存

rm -rf /home/hadoop/dfs/data/*

rm -rf /home/hadoop/dfs/name/*

4、

开启

start-all.sh

关闭

stop-all.sh

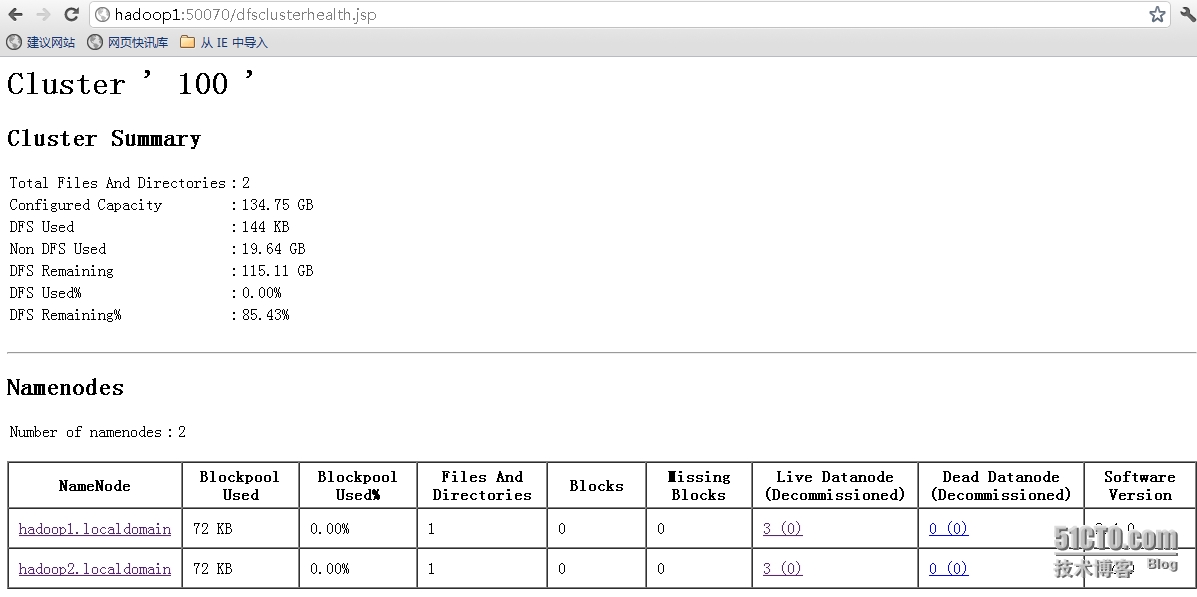

访问方式:

http://hadoop1.localdomain:50070/dfsclusterhealth.jsp

http://hadoop1.localdomain:50070/dfshealth.jsp

http://hadoop1.localdomain:50070/dfshealth.html#tab-overview

http://hadoop1.localdomain:8088/cluster/nodes

参考阅读:

http://hadoop.apache.org/docs/r2.2.0/hadoop-project-dist/hadoop-hdfs/Federation.html

http://zh.hortonworks.com/blog/an-introduction-to-hdfs-federation/